My Design Process

In the design phase of an instructional design project, it’s essential to ensure all activities and assessments in a training or course align with the objectives and serve a purpose.

For this reason, I use an alignment chart or curriculum map that follows William Horton’s Absorb-Do-Connect Model to intentionally map out activities where learners have opportunities to “absorb” new content, “do” or put it into practice, and then “connect” to it in some way. I like to combine this method with Bloom’s taxonomy and the principles of Universal Design for Learning (UDL). I do this before moving on to the development phase of building content, especially on a large project, to make sure that every activity and assessment has a clear purpose and that every objective is covered with measurable outcomes.

Below is an example of an alignment chart.

Alignment-Chart2

I also plan out the course in a design document, which covers many of the training details, such as seat time, a flow chart for sequencing topics, assessment decisions, and the authoring tools or other technology needed.

CBT_Design-Document5

As the project moves further along, I have a user and/or reviewer assess the course for effectiveness using what I call a “usability and learnability checklist.”

Usability-and-Learnability-Checklist

Curriculum Development

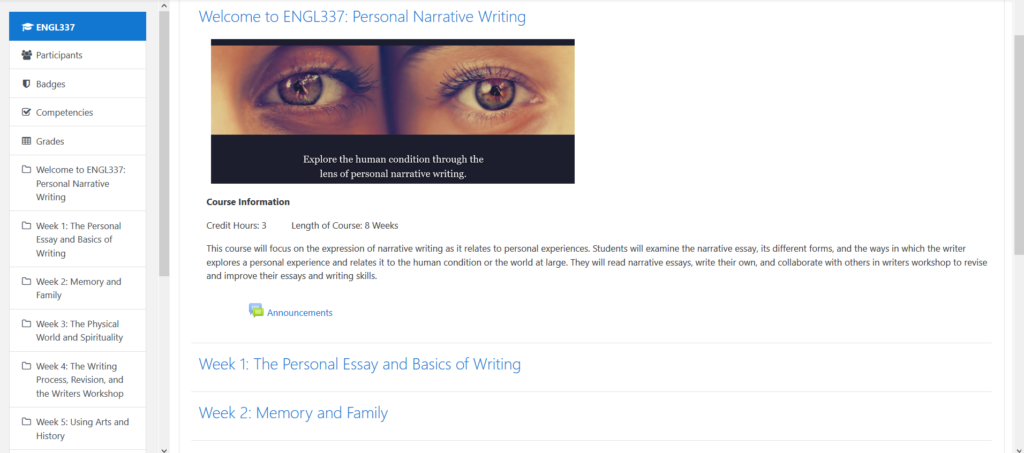

I have extensive experience developing curriculum. Below is an online college-level course I developed on personal narrative writing. I took it through the stages of curriculum development, through to building the master course shell in a learning management system.

Below are the syllabus and a screenshot of the online course shell in the Moodle learning management system.

Narrative-Essay-Syllabus

Assessment

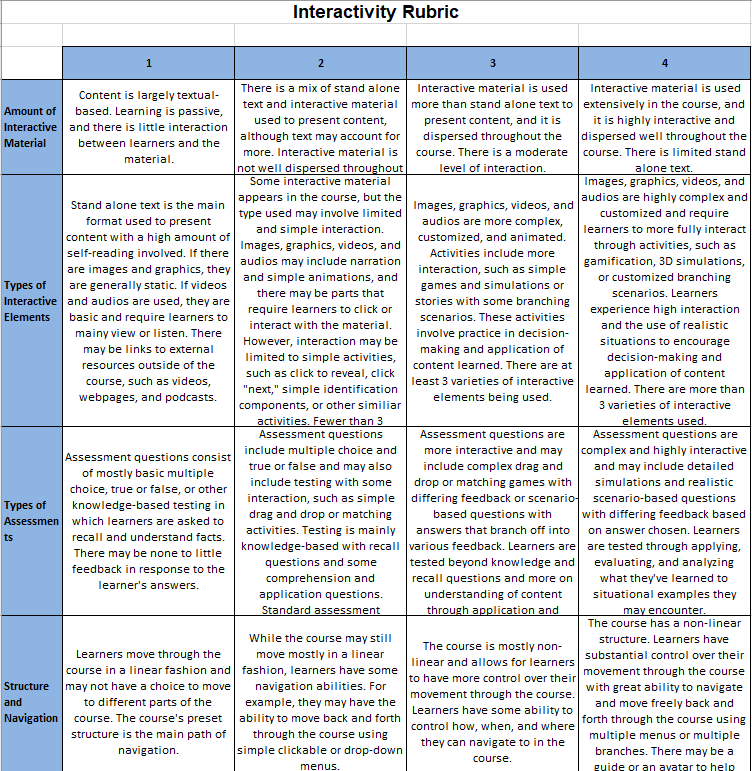

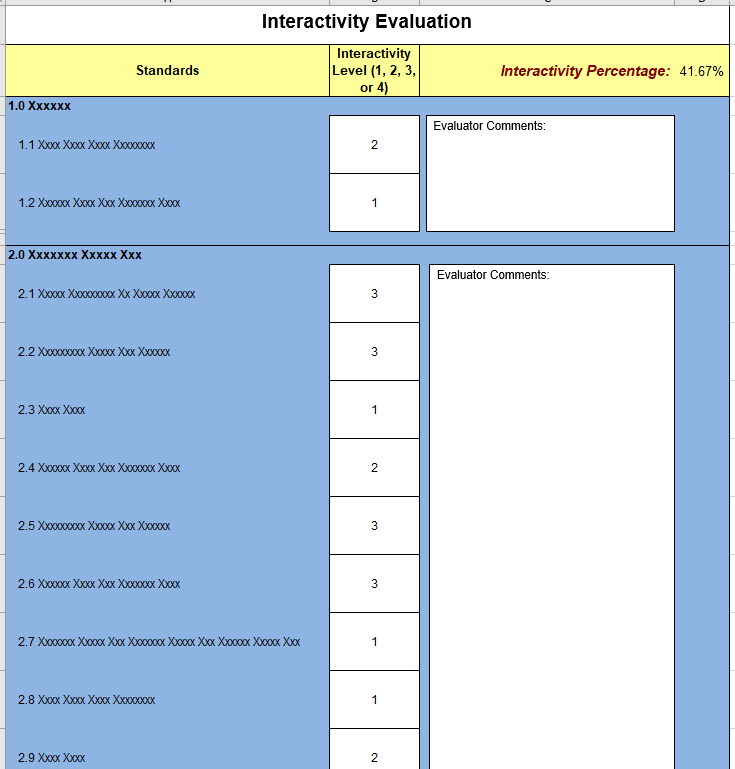

For this project, I helped an organization ensure that their online courses have interactive content for more engaged learning. It was determined that all online courses needed to meet a certain percentage for interactivity.

Therefore, my first task was to create a rubric that two groups of people at the organization could use as a guide to interactivity expectations: course developers (who were tasked with creating courses) and course reviewers (who had to evaluate and approve courses). My second task was to create an evaluation tool to help course reviewers assess interactivity more effectively on a course-by-course basis.

Below are screenshots of the rubric and evaluation tool. The rubric consists of four levels of interactivity and four criteria. The evaluation tool lists the standards or competencies for each course and includes an area where reviewers enter a score based on the rubric, depending on how interactive the content was for that competency. I integrated a hidden formula that automatically combines the scores into a final interactivity percentage. Because this kind of evaluation is subjective, I recommended having three course reviewers evaluate each course separately for a more balanced review.
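
The exact formula in the evaluation tool isn't reproduced here, but the general idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: each competency is scored from 1 to 4 (one point per rubric level), the percentage is the share of available points earned, and the three reviewers' results are combined with a simple average. The function names `interactivity_percentage` and `combined_percentage` are hypothetical and not part of the actual tool.

```python
from statistics import mean

# Assumption: the rubric's four levels of interactivity are scored 1 through 4.
MAX_LEVEL = 4


def interactivity_percentage(scores, max_level=MAX_LEVEL):
    """Return the share of available rubric points earned, as a percentage.

    `scores` holds one rubric level per course competency.
    """
    if not scores:
        raise ValueError("at least one competency score is required")
    for score in scores:
        if not 1 <= score <= max_level:
            raise ValueError(f"score {score} is outside 1..{max_level}")
    return 100 * sum(scores) / (max_level * len(scores))


def combined_percentage(reviews, max_level=MAX_LEVEL):
    """Average the percentages from several independent reviewers.

    Assumption: a plain mean; the real tool may combine reviews differently.
    """
    return mean(interactivity_percentage(r, max_level) for r in reviews)


if __name__ == "__main__":
    # Hypothetical scores from three reviewers for a course with four competencies.
    reviews = [
        [4, 3, 2, 3],
        [3, 3, 2, 2],
        [4, 4, 3, 3],
    ]
    for number, review in enumerate(reviews, start=1):
        print(f"Reviewer {number}: {interactivity_percentage(review):.1f}%")
    print(f"Combined: {combined_percentage(reviews):.1f}%")
```

Scoring each reviewer separately before averaging keeps the individual results visible, which makes it easier to spot cases where reviewers disagree sharply about the same course.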